City College, Fall 2019

Intro to Data Science

Week 3: Processing and Cleaning Data

September 16, 2019Today's Agenda

- Why Data Cleaning is Important

- Elements of the ETL Process

- Processing Tools

- Handling Missing Data

Week 2 Recap

- Structured vs. Unstructured Data

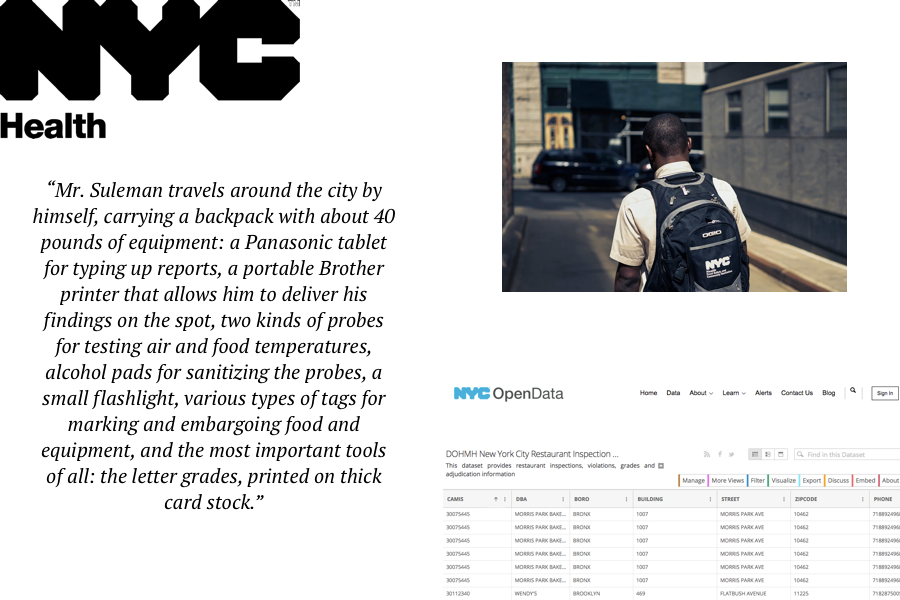

- Where Data Comes From: DBs, APIs, Flat Files, Web Scraping

- Sources of Data: Government, Private Firms, Personal

- U.S. Census Data

Assignment 2 Recap

- How was DataCamp?

- Grading and code

- Don't worry about syntax.

- Know how to use Python & Pandas.

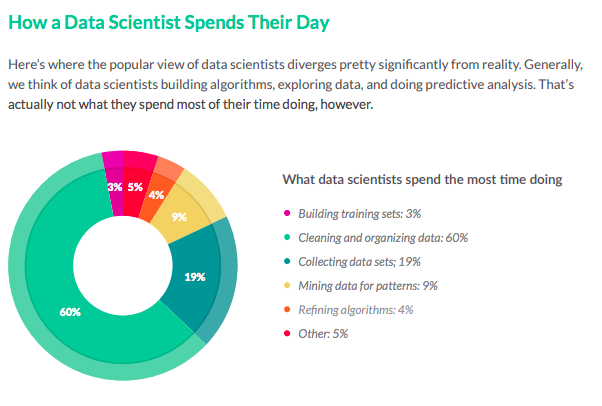

Source: CrowdFlower Data Science Report, 2016

Kaggle founder and CEO Anthony Goldbloom:

80 percent of data science is cleaning the data and 20 percent is complaining about cleaning the data

ETL

- Broadly: gathering input data from source(s)

- Practically: capturing and recording events

- Broadly: convert raw event data to usable form; enforce data model

- Practically: applying rules and functions to extracted data

- Rules: split, group, drop

- Transformations: map, apply, normalize

- Broadly: Push transformed data to datastore

- Practically: Publish data in usable form

- Database, flat file, api

- Dashboard, table, report

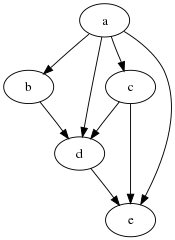

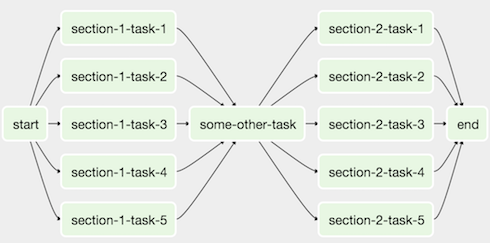

Representating ETL Pipelines

Directed Acyclic Graph (DAG): a graph finitely many edges and verices and edges with each edge directed from one vertex to another, such that there is no way to start at any vertex v and follow a consistently-directed sequence of edges that eventually loops back to v again.

This Can Get Complex

ETL Tools

Bash Scripts

Airflow and other data pipeline tools are organized around the concept of a DAG. A DAG – or a Directed Acyclic Graph – is a collection of all the tasks you want to run, organized in a way that reflects their relationships and dependencies.

Airflow Components

- Operator: describes a single task in a workflow. Operators are usually (but not always) atomic, meaning they can stand on their own and don’t need to share resources with any other operators. Examples: BashOperator, PythonOperator, etc.

- Task: a parameterized instance of an operator.

- Sensor: a type of special operator which will only execute if a certain condition is met.

- Pools: limits on the execution parallelism of arbitrary sets of tasks. (Prevents overwhelming resources.)

Lyft, Robinhood, and Zillow are among the many companies who have adopted Airflow to help manage their ETL processes.

ETL Process Stakeholders

Handling Missing Values

- Drop observations with missing fields.

- Impute values:

- Central tendancy: median, mean, mode.

- Modeled value.

We'll touch on this throughout the rest of the course.

Handling Outliers

- Drop.

- Trim.

- Be mindful.

We'll touch on this throughout the rest of the course.

Normalizing Data

- Adjust range.

- Adjust scale.

We'll touch on this throughout the rest of the course.

Wrap Up

- Why Data Cleaning is Important

- Elements of the ETL Process

- Processing Tools

- Handling Missing Values

Assignment 3: Due Monday, September 23 by 6:30pm

DataCamp's Statistical Thinking in Python (Part 1)

- The course should appear as assignment within your existing DataCamp account.

- Course takes ~3 hours, plan your time accordingly.