City College, Spring 2019

Intro to Data Science

Week 3: Processing and Cleaning Data

February 11, 2019Today's Agenda

- Why Data Cleaning is Important

- Elements of the ETL Process

- Processing Tools

- Handling Missing Data

Week 2 Recap

- Structured vs. Unstructured Data

- Where Data Comes From: DBs, APIs, Flat Files, Web Scraping

- Sources of Data: Government, Private Firms, Personal

- U.S. Census Data

Assignment 2 Recap

- How was DataCamp?

- Grading and code

- Don't worry about syntax.

- Know how to use Python & Pandas.

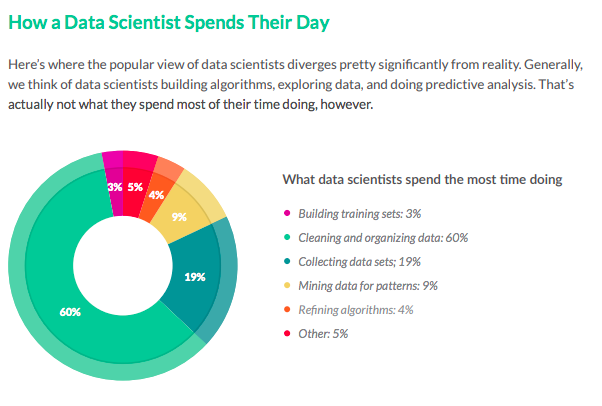

Source: CrowdFlower Data Science Report, 2016

Kaggle founder and CEO Anthony Goldbloom:

80 percent of data science is cleaning the data and 20 percent is complaining about cleaning the data

ETL

- Broadly: gathering input data from source(s)

- Practically: capturing and recording events

- Broadly: convert raw event data to usable form; enforce data model

- Practically: applying rules and functions to extracted data

- Rules: split, group, drop

- Transformations: map, apply, normalize

- Broadly: Push transformed data to datastore

- Practically: Publish data in usable form

- Database, flat file, api

- Dashboard, table, report

This Can Get Complex

ETL Tools

Bash Scripts

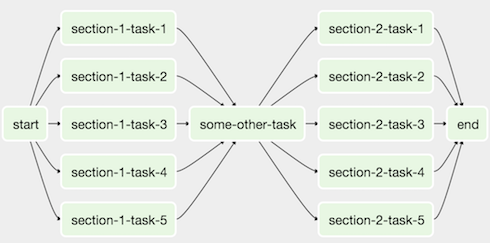

Airflow and other data pipeline tools are organized around the concept of a DAG. A DAG – or a Directed Acyclic Graph – is a collection of all the tasks you want to run, organized in a way that reflects their relationships and dependencies.

Airflow Components

- Operator: describes a single task in a workflow. Operators are usually (but not always) atomic, meaning they can stand on their own and don’t need to share resources with any other operators. Examples: BashOperator, PythonOperator, etc.

- Task: a parameterized instance of an operator.

- Sensor: a type of special operator which will only execute if a certain condition is met.

- Pools: limits on the execution parallelism of arbitrary sets of tasks. (Prevents overwhelming resources.)

Lyft, Robinhood, and Zillow are among the many companies who have adopted Airflow to help manage their ETL processes.

ETL Process Stakeholders

Handling Missing Values

- Drop observations with missing fields.

- Impute values:

- Central tendancy: median, mean, mode.

- Modeled value.

We'll touch on this throughout the rest of the course.

Handling Outliers

- Drop.

- Trim.

- Be mindful.

We'll touch on this throughout the rest of the course.

Normalizing Data

- Adjust range.

- Adjust scale.

We'll touch on this throughout the rest of the course.

Wrap Up

- Why Data Cleaning is Important

- Elements of the ETL Process

- Processing Tools

- Handling Missing Values

Project: Components

- Apply Python to load, clean, and process data sets.

- Identify key elements of and patterns in your data set using computational analysis and statistical methods.

- Apply principles of statistical modeling and machine learning to your data.

- Explain, visualize, and communicate empirical findings within your analysis.

- Demonstrate effective team collaboration

Project: Teams

- Three to four people per group.

- Requests to work together will be honored to the extent practical.

- Contributions to the team's efforts, as measured by GitHub commits and peer review, will be a significant portion of the project grade.

Project: Data Resources

- Kaggle Datasets

- Data.gov

- Google Dataset Search

- This list, and other lists on the internet

- (Legal, Responsible, Ethical) Web Scraping!

Be Creative

Project: Key Dates (Tentative)

- Project Teams Formed, February 25.

- Project Proposals Due via Email, March 18.

- Project Update, April 29.

- Projects Due, May 13.

Be Creative

Assignment 3: Due Monday, February 25 by 6:30pm

DataCamp's Statistical Thinking in Python (Part 1)

- The course should appear as assignment within your existing DataCamp account.

- Course takes ~3 hours, plan your time accordingly.

Optional: Due Thursday, February 21 by 11:59pm

Email me your preferred project teammates.